In academic circles, the word ‘consciousness’ used to be so frowned upon that professional researchers often didn’t even dare utter it, euphemistically calling it the ‘C-word’ instead. These days, ‘consciousness researcher’ has become a far more respectable job description. My own path through this intellectual landscape has run in the opposite direction. I started out utterly mystified by the phenomenon and eager to forge whatever new avenues there might be across this new frontier of science. But in the past few years, my interests have been shifting away from the exoticness of qualia and towards the algorithm of intelligence. The latter has started to seem to me much more of a ‘hard problem’, but, crucially, one for which a solution is conceivable. The problem of qualia, on the other hand, seems doomed from the start. As posed, it asks us to explain how a phenomenal state could arise that is independent of the physical (or, on a weaker claim, the functional) mechanisms correlated with it. This seems precisely the wrong way to approach the issue: it sets qualia up from the outset as exotic states in need of explanation, and makes searching for a solution much like trying to square the circle.

It all starts with the zombie argument, of course. We are asked to accept that zombies are conceivable, and are ridiculed if we don’t. A zombie, in this sense, is a non-conscious automaton that is nevertheless atom-for-atom identical to a conscious human. My immediate quarrel with this thought experiment is that the way the scenario is framed already assumes that consciousness can, in some sense, be conceptually isolated from the normal functioning of a human being. We are asked to imagine an automaton that is a physical replica of a human being, possesses a fully functional, intact nervous system and behaves exactly as a human being would, yet somehow has no experiences and instead operates ‘in the dark’. But if I am to be completely intellectually honest about this, from an epistemological point of view everyone who is not me might just as well be a zombie. Nothing distinguishes the automaton I am asked to imagine from the people I interact with every day. I cannot know for sure whether anyone I meet is having the sort of conscious experiences I know myself to be having. Nevertheless, I comfortably assume that I am not the only one who has them. I take this ‘leap of faith’, if you like, precisely because the epistemic barrier cannot be breached under any circumstances. If this is granted, how am I even to conceive of a being that is externally just like any other but internally vacuous? I have no way of conceiving of it, because someone else’s experiential domain is, for me, an entirely empty notion. Since it has no content I can access, I must treat any beings that are externally, and thus physically, identical as truly and completely identical in every respect.

Now, if we accept that the conceivability of zombies is untenable, we have two main options for how to proceed. We can claim that P-states (phenomenal states, or qualia) are indistinguishable from A-states (access states, whose contents are available for reasoning and report), which amounts to the eliminativist view. Alternatively, we can retain the notion of qualia as non-physical states but strip them of their exoticness by attributing them to everything, as an essential complement to the physical, which amounts to the panpsychist view. Under the first option, then, we are forced to accept that a so-called zombie, which by definition has A-states, must also have P-states, and thus cannot be a zombie. Under the second option, there can be no such thing as a zombie, because merely being a physical entity entails a mental correlate. It seems to me that what is most interesting about consciousness at this juncture is shared by both options, namely the question of what it is about physical states that enables the evident gradient of consciousness we observe in the natural world. It is clear that my A-states are far richer and wider in scope than those of a sea slug. Likewise, the panpsychist has to admit that the basic mentality accompanying anything physical must be capable of greater sophistication in a more interconnected physical object than in a less interconnected one. A panpsychist would still attribute a mental realm to a rock, but I would find it hard to claim that it matches an orangutan’s in richness and scope.

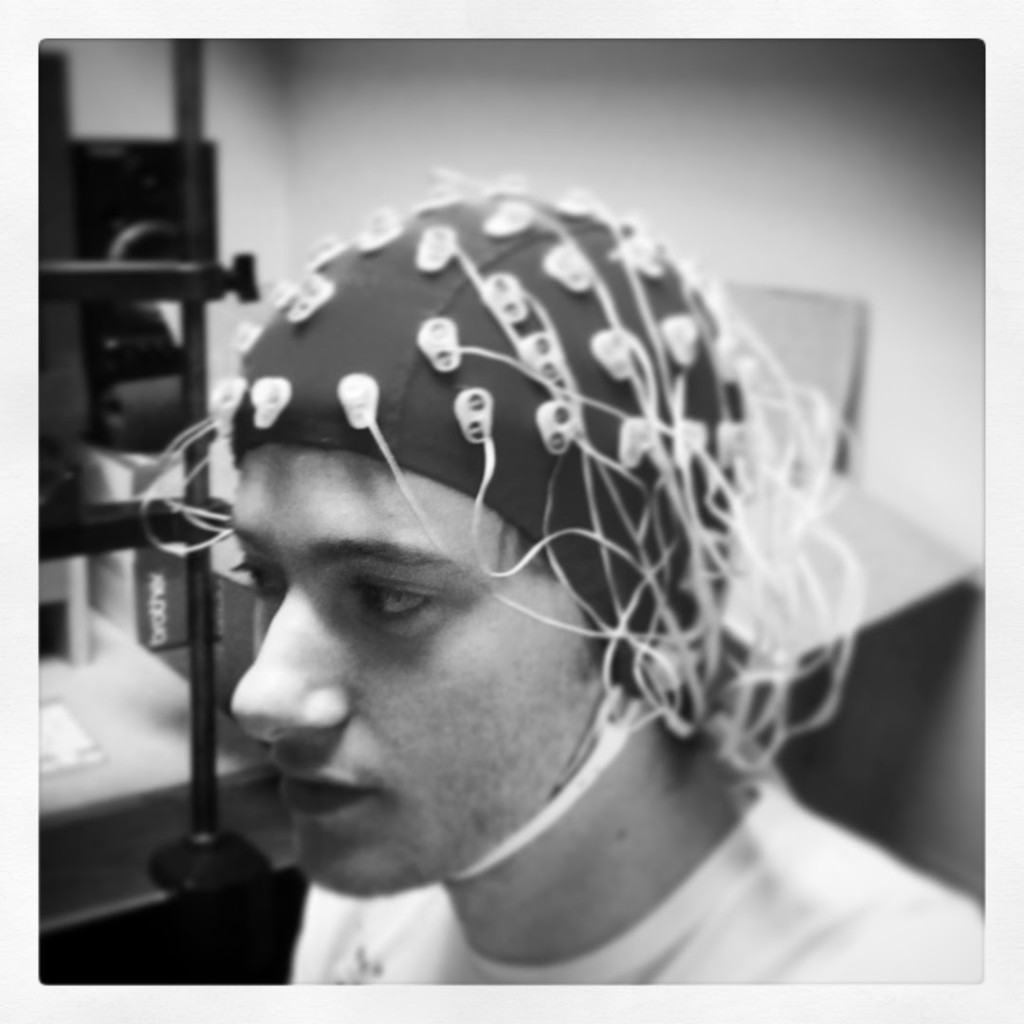

The question then becomes: what are the computational principles that underlie the increasing scope of an interconnected object? How does nature efficiently wire together tens of billions of neurons, through trillions of synapses, so as to bring the information they process together onto a common stage? Two of the leading scientific theories of consciousness tackle this very question: how a Global Workspace comes about (Dehaene), and how information is integrated (Tononi). The locus of the scientific pursuit has thus shifted from the metaphysical, which was unscientific and therefore futile, to the algorithmic. We are searching for code that we could run in silico to bring different information-processing modules into cooperation with one another.

Demis Hassabis has called for the field of AI to be renamed AGI, for artificial general intelligence, arguing that AI has already been achieved in isolated modules and citing impressive advances in facial recognition, natural language processing and other remarkable feats of machine learning. What remains uncracked is how to build a platform that can draw on different specialized information-processing subroutines, whose outputs stream in using a common protocol that enables fast, efficient communication between tasks. It is called general intelligence because it is flexible and can apply different modules at different times, depending on what the task at hand requires. It seems to entail a kind of self-monitoring, or meta-intelligence, insofar as the system knows which tasks it can perform and when to deploy them. In this regard it comes close to the domain of metacognition, the study of how we think about our own thinking, and specifically to an intriguing theory, proposed by a researcher in our department, that consciousness should be sought in the metacognitive confidence ratings employed in many psychophysical studies.
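To make the idea of specialist modules reporting to a ‘common stage’ a little more concrete, here is a deliberately toy sketch in Python. It is not Dehaene’s model or Tononi’s theory (nothing here measures integrated information); every name in it (`Message`, `Module`, `GlobalWorkspace`, `make_detector`) is hypothetical and my own. The assumptions are that each module reports in one shared message format, attaches a self-assessed confidence, and that the workspace simply broadcasts the most confident report back to every module. Choosing the winner by confidence is just one possible selection rule, picked here because it loosely echoes the metacognitive view mentioned above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    """Hypothetical common protocol: every module reports in this format."""
    source: str
    content: str
    confidence: float  # the module's own estimate that its report is relevant


class Module:
    """A specialist subroutine that processes input and receives broadcasts."""

    def __init__(self, name: str, process: Callable[[str], Message]):
        self.name = name
        self.process = process
        self.received: List[Message] = []

    def receive(self, msg: Message) -> None:
        self.received.append(msg)


class GlobalWorkspace:
    """Toy workspace: modules bid, the most confident bid is broadcast to all."""

    def __init__(self, modules: List[Module]):
        self.modules = modules

    def step(self, stimulus: str) -> Message:
        # Every specialist processes the same stimulus and submits a bid.
        bids = [m.process(stimulus) for m in self.modules]
        # Assumed selection rule: the highest self-reported confidence wins.
        winner = max(bids, key=lambda b: b.confidence)
        # The winning content is broadcast back to every module.
        for m in self.modules:
            m.receive(winner)
        return winner


def make_detector(name: str, keyword: str) -> Module:
    """Hypothetical keyword 'detector' standing in for a real specialist."""
    def process(stimulus: str) -> Message:
        found = keyword in stimulus
        return Message(name, f"{keyword} {'present' if found else 'absent'}",
                       0.9 if found else 0.1)
    return Module(name, process)


workspace = GlobalWorkspace([
    make_detector("vision", "face"),
    make_detector("language", "word"),
])
winner = workspace.step("a smiling face")
print(winner.source, winner.content)  # prints: vision face present
```

The point of the sketch is only the shape of the architecture: the modules never talk to each other directly, and whatever wins the competition becomes globally available to all of them in the same format.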